The Problem with Incentives: People Are Too Smart for Their Own Good

Let’s be real — every time you design a control system, someone, somewhere, is going to figure out how to game it. It’s not a question of if but when. Whether it’s employees, suppliers, or even top management, people will optimize for whatever earns them the biggest reward with the least effort or cost.

A core challenge for any organization is designing a performance management system where employee’s personal incentives align with the company’s long-term value creation.

The underlying mechanism for this alignment depends on how design choices shape employee’s perceived rewards and effort trade-offs — ultimately influencing their decisions and behaviors.

Without even knowing, organisations often promote the wrong behaviors:

Maybe it’s a sales team stuffing the pipeline with junk leads to hit their targets. Maybe it’s warehouse staff shipping incomplete orders just to boost throughput numbers. Or maybe it’s a service team closing tickets too fast — without actually solving anything.

This isn’t just frustrating; it can quietly wreck long-term performance. When bad behaviors get rewarded, good behaviors disappear. And suddenly, you’re drowning in inflated KPIs that make everything look great—on paper.

So, how do you build a performance management system that doesn’t fall apart the moment people start looking for loopholes?

1. Be Careful What You Measure—Because That’s What You’ll Get

Here’s the golden rule: what you measure is what people will optimize for. Not what you want them to optimize for—what you actually put on the scoreboard.

If you reward speed in customer service but don’t track resolution quality, don’t be surprised when agents rush through calls and leave customers frustrated. If you set procurement targets based on cost savings alone, expect suppliers to cut corners.

The problem? Most incentive systems assume people will interpret targets in good faith. They won’t. They’ll take them literally.

The Fix: Balance Your Metrics

The trick isn’t to micromanage every possible scenario—it’s to build in counterbalances:

• Sales targets? Pair them with customer retention rates.

• Productivity goals? Weigh them against error rates.

• Cost-cutting incentives? Measure impact on quality.

If employees know they have to hit multiple (sometimes conflicting) goals, they’re less likely to game one metric at the expense of everything else.

2. Beware of Short-Term Thinking—It’s Addictive

People love quick wins. But short-term rewards often come at the cost of long-term stability.

Take a classic example: bonus structures that reward quarterly performance. Sure, they drive immediate results—but they also encourage things like:

• Revenue pulling (closing deals early just to hit targets)

• Cost deferrals (pushing expenses into the next quarter to make numbers look better)

• Slashing investments (ignoring R&D or training because it doesn’t help thisquarter’s bonus)

The result? KPIs that looks great today but slowly crumble over time.

The Fix: Reward Long-Term Success

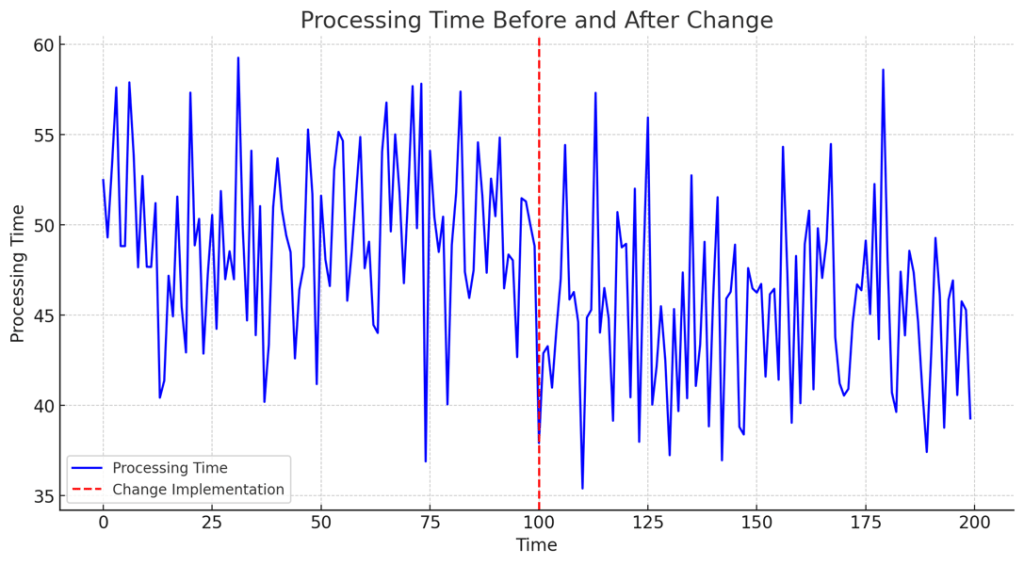

Instead of focusing only on short-term results, tie incentives to sustained performance:

• Instead of monthly sales targets, track 12-month rolling averages.

• Instead of quarterly cost reductions, measure total cost of ownership over several years.

• Instead of immediate project completion, reward teams for post-implementation success (did the process actually work six months later?).

This doesn’t mean ignoring short-term performance—it just means making sure it isn’t the only thing that matters.

3. Don’t Let People “Sandbag” Their Targets—Or Fear Success

Ever noticed how some teams always just hit their targets—but never exceed them? That’s sandbagging.

It happens when employees lowball their targets so they can hit them easily. Sales reps do it. Project managers do it. Even executives do it.

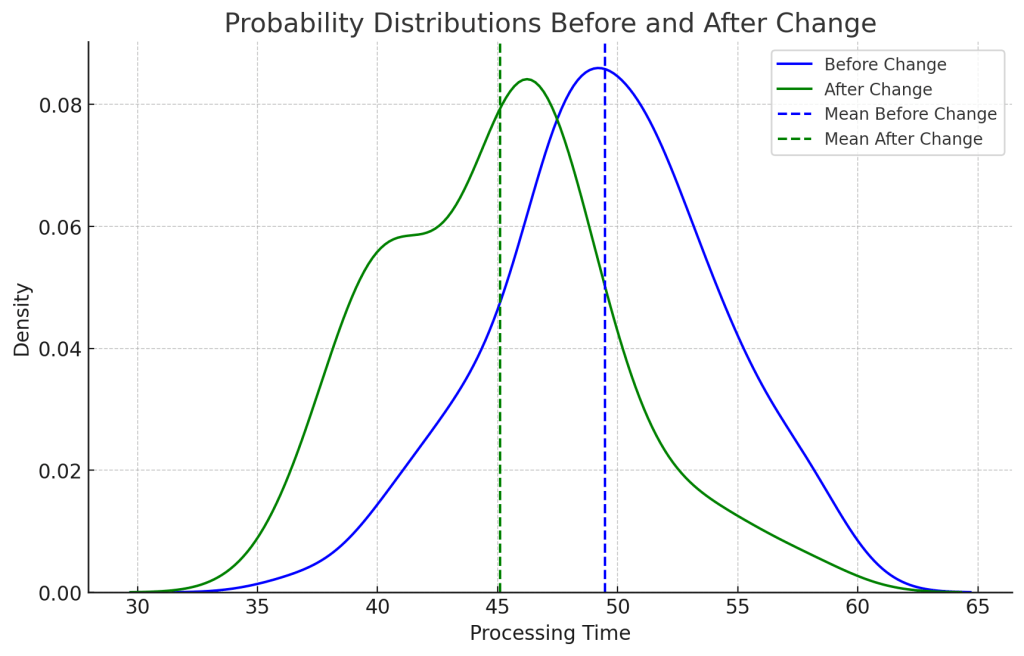

It’s understandable—if your bonus depends on meeting goals, why would you set them too high? But there’s another reason teams hold back: the ratchet effect.

When management tightens targets every time employees exceed them, they create a system where success feels like punishment. Employees quickly learn that overperformance today just means an even harder target tomorrow. Instead of pushing themselves, they start managing expectations—deliberately capping their effort to avoid setting an unsustainable precedent.

The Fix: Make Targets Ambitious—but Fair

Here’s how to stop sandbagging and prevent the ratchet effect from backfiring:

• Use historical data wisely – If a team consistently beats its targets by 20%, don’t just raise the bar without understanding why. Did they genuinely improve, or were targets too low to begin with?

• Incorporate stretch goals – Set a baseline target (minimum acceptable) and a stretch target (challenging but achievable). This encourages ambition without making employees feel trapped in an ever-rising cycle.

• Avoid automatic ratcheting – Instead of mechanically increasing targets every time they’re hit, factor in market conditions, workload capacity, and sustainability of performance.

• Recognize effort, not just output – If targets are continually raised without acknowledging the effort behind them, employees will disengage. Reward progress and long-term contributions, not just short-term spikes.

The goal isn’t just to push people to perform—it’s to make sure they don’t feel like they have to hold back just to survive.

4. Watch Out for “Perverse Incentives” (a.k.a. Manipulation Risks)

Not all gaming is intentional. Sometimes, poorly designed incentives lead to completely unexpected behaviors.

A corporate example? A company that paid call center agents based on how many calls they handled. Great—until employees started hanging up on difficult customers just to take more calls.

The Fix: Think Like a Skeptic

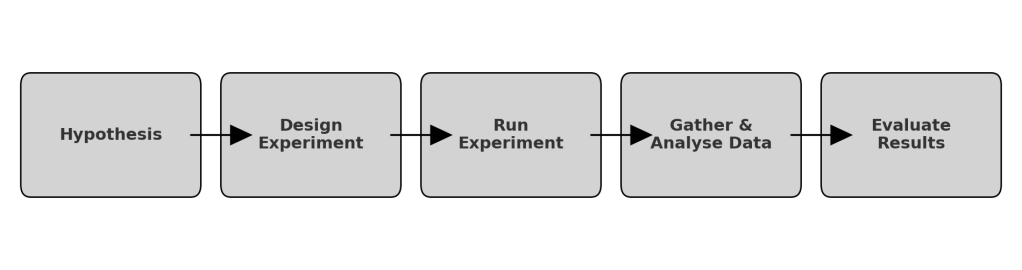

Before rolling out an incentive, ask: How could this be manipulated?

• Run small-scale pilots before full implementation.

• Get input from different departments (finance, operations, frontline employees) to spot blind spots.

• Create contingency plans—what happens if the incentive encourages the wrongbehaviors?

A little cynicism in planning can save a lot of chaos later.

5. The Best Incentives? A Culture Where People Don’t Need to Game the System

At the end of the day, no system is perfect. There will always be ways to game incentives. The real question is: why do people feel the need to do it in the first place?

Fostering the right culture and social norms is the only option that minimizes all gaming risks – with no downside attached.

If employees don’t trust leadership… If they feel like targets are arbitrary… If they think the system is rigged against them… They’ll look for ways to manipulate it.

But if they believe in the company’s goals? If they see their incentives as fair? If they trust that leadership rewards genuine success, not just numbers? They’ll be less inclined to game the system in the first place.

The Fix: Build a Performance System People Actually Respect

• Make incentives transparent—No hidden rules. No moving goalposts.

• Listen to employees—If teams say a target is unreasonable, find out why.

• Focus on purpose, not just paychecks—People work harder when they believe in what they’re doing.

The best incentive system isn’t the one with the most checks and balances—it’s the one people don’t want to cheat.

Final Thought: Don’t Blame the Players, Fix your Game

If your performance management system is constantly getting gamed, the problem isn’t just the employees—it’s the system itself.

People respond to incentives. Always have, always will. Your job isn’t to force them to behave the “right” way—it’s to design a system where the easiest way to win… is to do the right thing.

And when that happens? You won’t need to worry about gaming incentives anymore. You’ll have built a system where success actually means something